Working with log files in Emacs

Table of Contents

| Author | Lee Hinman (lee@elastic.co) |

| Date | 2017-09-28 16:03:13 |

Introduction

Hi I'm Lee, I work on Elasticsearch.

As a developer of an application that generates quite a few logs, as being quite popular for log

ingestion and analysis, I frequently find that I spend time pouring over logs, whether they be local

logs from some new features of bugfix I'm trying to reproduce, customer support logs trying to

determine what is going on in a system, or build failure logs from a gradle test run. Through

these trying times I find that Emacs can be an invaluable tool for wading through logs.

In this article, I'll share some of the things I find useful for searching through logs. They'll generally be Elasticsearch logs, but the same tools can be used for other applications, it's a pretty neutral topic.

By "log", I usually mean anything ending in .log, though quite a bit of this applies to reading any long record, even structured things like .json files, I'll cover this more as I come across it.

Reading logs

The number one thing that I expect someone would want to do with logs is to make them readable. "Readable" means a few different things to different people, so this may not hit 100% of the mark, but there are definitely some things you can do to make logs more readable.

Logs that are undergoing writes

I commonly use tail -F for monitoring logs on Linux systems, and Emacs has something like that

also. To watch a file for changes and update the buffer automatically, open the file and then invoke

M-x auto-revert-tail-mode. Emacs will then behave just like tail -f for the currently open file.

Dealing with very large log files

50mb? Okay, no problem.

342mb? Sure, Emacs can handle that.

2.7gb? Well, maybe that is getting a bit large for a single buffer, especially when we want to add searching and all that to the mix. It's not that Emacs can't handle it, it's just that some operations you want to do may be a bit slower than you'd like.

Enter vlf, vlf is short for "View Large Files" and is a very nice way to handle viewing extremely large files in Emacs, not just log files. I've used it successfully for reading log files over 10 gigabytes. I'll leave it to you to read the page about the features it provides, but suffice it to say that it breaks up large files into manageable chunks, and then provides tools to operate on either a small chunk, or across all the chunks of a very large file.

Install vlf, and try to open a 1.2gb log file and you'll get a prompt that says

File my_big_log.log is large (1.2G): open normally (o), open with vlf (v) or abort (a)

You can then hit either o, v, or a depending on what you'd like to do. You can even configure

the limit at which it asks you by configuring the large-file-warning-threshold setting.

Once you open a file with VLF, you can scroll batches by either going to the bottom/top of the chunk and

then going to the next/previous line, or you can use C-c C-v and then n or p to go to the next

or previous chunk. VLF's bindings all start with the C-c C-v prefix, so some of the helpful ones

are:

C-c C-v sandC-c C-v rfor searching forward and backward through the fileC-c C-v obuilds an "occur" index over the entire file. We'll talk more about occur in the "searching" sectionC-c C-v ljump to a particular line in the big file

You can even edit the file, saving only the batch/chunk you're currently in.

There are even more features, so check out the VLF page for the full documentation if you routinely work with large files.

Using a major mode

Since Elasticsearch is a Java application, it uses logs that are in one of those formats that is common to most other Java applications, the log4j format.

There are a few different options, depending on what sort of logs you are viewing and what format they're in, they're log4j-mode1 which is stripped down and doesn't handle much other than syntax highlighting for different log levels, as well as rudimentary support for filtering included and excluded content from the log file in question. At the time of this writing the site is down, but you can still download the library from MELPA.

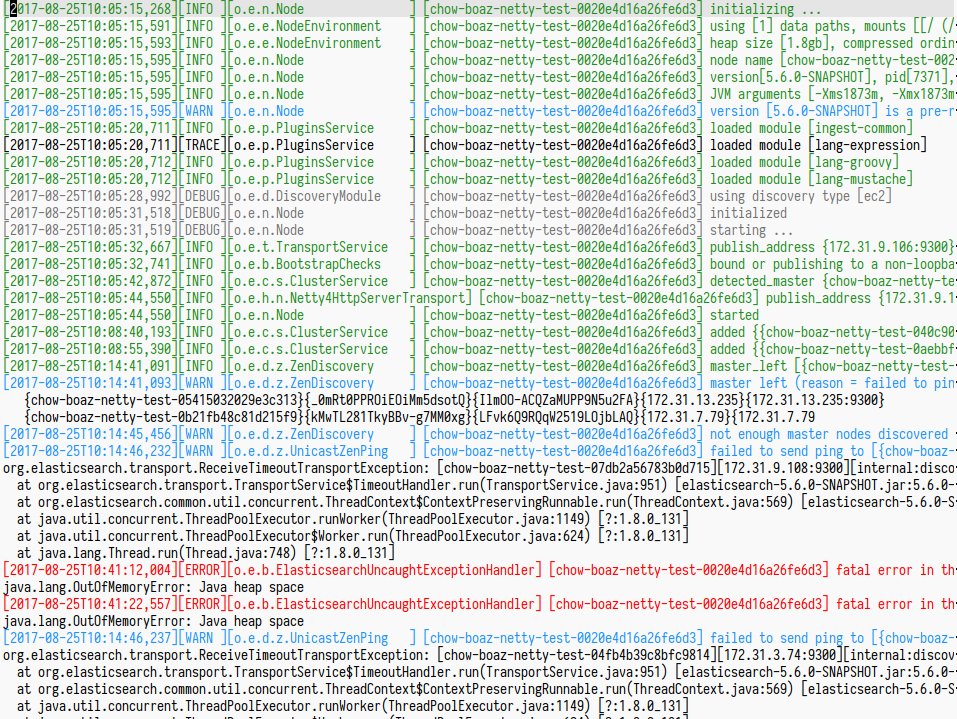

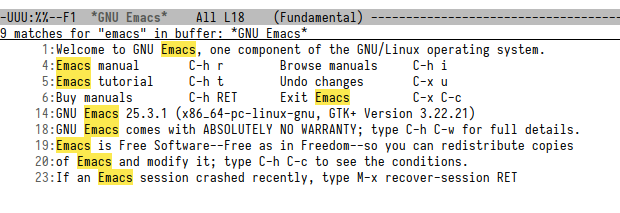

Figure 1: An example of what log4j-mode looks like

Along the same lines (focused on a particular format), there is rails-log-mode which is designed for Rails logs, however, I cannot comment on it as I don't use Rails.

Finally, on the more generic side there is a mode called Logview that provides a more feature-rich

experience for viewing logs. At the time of this writing though, it doesn't support the timestamp

format that ES uses (ISO-8601 It also handles ES' timestamp format.

Logview has a lot of features, however I tend to stick with my regular editing habits. I hope to

change this in the future though, so give it a shot if it looks interesting to you.

yyyy-MM-dd'T'HH:mm:ss,SSS)

I personally stick with log4j-mode, even though it doesn't have many features other than syntax

highlighting. There are a few things you can configure other than faces, and you can jump between

log messages with M-} and M-{, but generally we don't look at major modes for log mining

features.

Sometimes when viewing a file, you don't have enough vertical space. To alleviate this, Emacs has a

feature called follow-mode. If you split the buffer vertically with C-x 3 and then invoke M-x

follow-mode Emacs will treat the pane on the left as the "top" of the buffer and the pane on the

right as the "bottom", making the viewing part essentially twice as high. If you scroll in one pane

the other pane will follow. It's great for viewing very large files as well as large programming

methods!

Helpful minor modes

Okay, there aren't a great number of helpful major modes, but we do have a lot of minor modes to help out with reading the logs, some that are built in, and some that aren't.

I find that line numbers aren't very helpful for reading logs, but I do find that hl-line-mode is

invaluable for highlighting the current line you are viewing in the logs. There's also

global-hl-line-mode if you want it everywhere. hl-line is built in to Emacs, so there's nothing

you'll need to download/install to use it.

It's uncommon in my experience to want to edit the log while viewing it (though you may want to

delete lines from the log), so in that case, I heartily recommend view-mode. Another built-in

mode, View mode is designed for scanning buffers by the screenful (just what we want to do with

logs!). It helpfully allows using a different keymap, so that you can switch to more "navigation"

style keybindings. It's easy to invoke, simply use M-x view-mode while on a file; I have the

following for my log4j-mode hooks

(use-package log4j-mode :ensure t :disabled t :init (add-hook #'log4j-mode-hook #'view-mode) (add-hook #'log4j-mode-hook #'read-only-mode) (add-hook #'log4j-mode-hook 'eos/turn-on-hl-line))

And in my customized view-mode bindings

(use-package view :config (defun View-goto-line-last (&optional line) "goto last line" (interactive "P") (goto-line (line-number-at-pos (point-max)))) (define-key view-mode-map (kbd "e") 'View-scroll-half-page-forward) (define-key view-mode-map (kbd "u") 'View-scroll-half-page-backward) ;; less like (define-key view-mode-map (kbd "N") 'View-search-last-regexp-backward) (define-key view-mode-map (kbd "?") 'View-search-regexp-backward?) (define-key view-mode-map (kbd "g") 'View-goto-line) (define-key view-mode-map (kbd "G") 'View-goto-line-last) ;; vi/w3m like (define-key view-mode-map (kbd "h") 'backward-char) (define-key view-mode-map (kbd "j") 'next-line) (define-key view-mode-map (kbd "k") 'previous-line) (define-key view-mode-map (kbd "l") 'forward-char))

I primarily view logs by scrolling half-pages, so I can keep context more easily. If "e" and "u" seem like strange choices to you, it's because I type with a Dvorak keyboard layout and those keys are where "d" and "f" would be on a regular Qwerty keyboard.

As an alternative to view-mode, I have my own Hydra state that I use for navigation when I don't

feel like holding down modifiers (I am not an EVIL user, so this may not apply if you are)

(defhydra eos/nav-mode (:foreign-keys run) "[NAV-MODE] q or i to exit" ("C-h" hl-line-mode) ("t" toggle-truncate-lines) ("a" beginning-of-line) ("l" forward-char) ("h" backward-char) ("n" next-line) ("j" next-line) ("p" previous-line) ("k" previous-line) ("e" View-scroll-half-page-forward) ("u" View-scroll-half-page-backward) ("SPC" scroll-up-command) ("S-SPC" scroll-down-command) ("<" beginning-of-buffer) (">" end-of-buffer) ("." end-of-buffer) ("C-'" nil) ("d" (when (y-or-n-p "Kill buffer?") (kill-this-buffer)) :exit t) ("/" isearch-forward-regexp :exit t) ("?" isearch-backward-regexp :exit t) ("i" nil :exit t) ("q" nil :exit t))

I bind this to M-V2, so I can easily invoke it

in any buffer to enter a navigation-like mode, with some keybindings to make it easier to scan

through pages and pages of logs.

Hiding parts of the log file

Sometimes you want to limit the scope of what you're reading, say, between a couple of timestamps. There are a number of ways to do this, but one way that is quite common in Emacs is narrowing. When narrowing, only the text you want will be displayed in the buffer.

I recommend Endless Parentheses' narrow-or-widen-dwim for this, because it will allow you to use a

single binding for narrowing and widening (undoing the narrow), as well as some nice criteria that

works outside of log viewing as well. For simplicity's and future-proofing sake I'll reproduce the

one I use here, but visit the original page to see the full explanation.

(defun eos/narrow-or-widen-dwim (p) "Widen if buffer is narrowed, narrow-dwim otherwise. Dwim means: region, org-src-block, org-subtree, or defun, whichever applies first. Narrowing to org-src-block actually calls `org-edit-src-code'. With prefix P, don't widen, just narrow even if buffer is already narrowed." (interactive "P") (declare (interactive-only)) (cond ((and (buffer-narrowed-p) (not p)) (widen)) ((region-active-p) (narrow-to-region (region-beginning) (region-end))) ((derived-mode-p 'org-mode) ;; `org-edit-src-code' is not a real narrowing ;; command. Remove this first conditional if ;; you don't want it. (cond ((ignore-errors (org-edit-src-code) t) (delete-other-windows)) ((ignore-errors (org-narrow-to-block) t)) (t (org-narrow-to-subtree)))) ((derived-mode-p 'latex-mode) (LaTeX-narrow-to-environment)) (t (narrow-to-defun))))

I bind mine to C-x C-n so it's easy to hit

(global-set-key (kbd "C-x C-n") #'eos/narrow-or-widen-dwim)

Since this works on a highlighted region, you can select the text you want to limit your actions on,

and then hit C-x C-n to shrink the buffer down to just that text. Do whatever analysis you need

to, and then hit C-x C-n again to widen back to the big file.

Figure 2: A log buffer with text selected prior to narrowing

Figure 3: The same buffer after narrowing to the selected region

Want to have two copies of the buffer, on that is narrowed and one that's un-narrowed? Create an

indirect buffer! Hit C-x 4 c to "clone" the buffer, you can then narrow each copy independently,

while keeping them synchronized for other actions (like filtering or modifying).

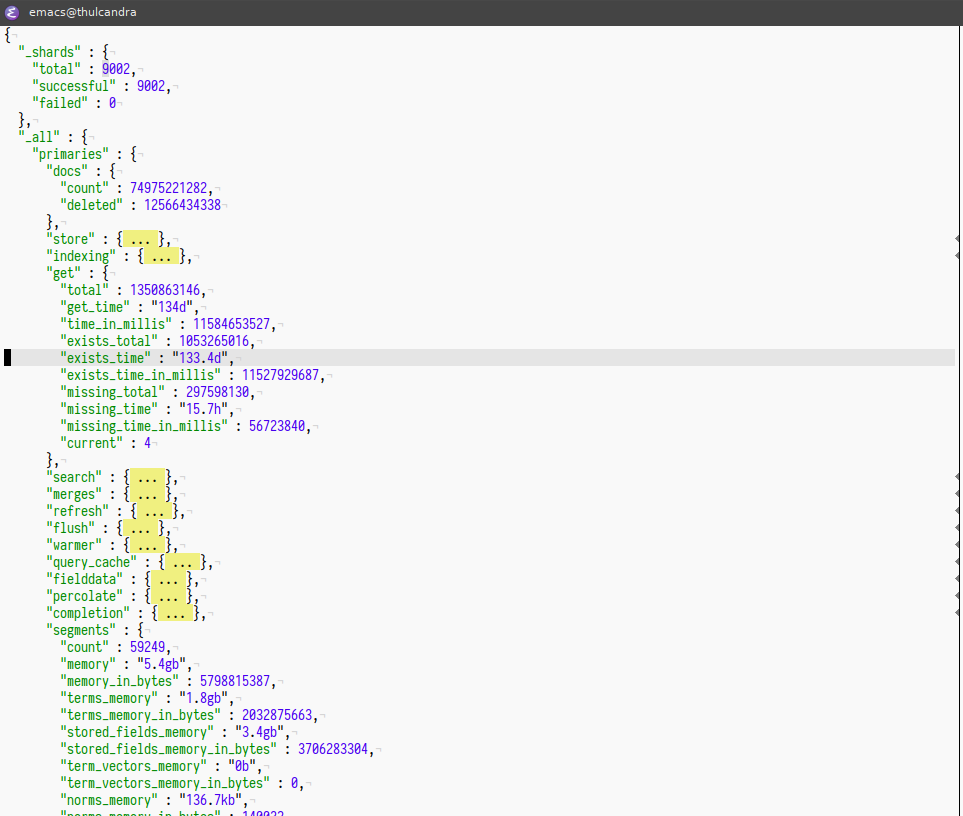

Hiding structured data

In addition to regular log files, I deal a lot with structured log-like data, almost entirely in JSON format. In addition to the regulars like json-mode and json-reformat for just viewing the data, sometimes it's helpful to be able to hide certain parts of the structured data. This is something that Emacs' built-in Hideshow3 is great at.

Before describing it, here's a minimal configuration for it:

(use-package hideshow :bind (("C-c TAB" . hs-toggle-hiding) ("C-\\" . hs-toggle-hiding) ("M-+" . hs-show-all)) :init (add-hook #'prog-mode-hook #'hs-minor-mode) :diminish hs-minor-mode :config (setq hs-special-modes-alist (mapcar 'purecopy '((c-mode "{" "}" "/[*/]" nil nil) (c++-mode "{" "}" "/[*/]" nil nil) (java-mode "{" "}" "/[*/]" nil nil) (js-mode "{" "}" "/[*/]" nil) (json-mode "{" "}" "/[*/]" nil) (javascript-mode "{" "}" "/[*/]" nil)))))

The main way I use this is to put the point (represented as | in the code below) inside of an

expression and then hit C-c TAB or C-\, which changes

{

"foo": {|

"bar": "baz"

},

"eggplant": 11

}

Into

{

"foo": { ... },

"eggplant": 11

}

Hitting C-c TAB or C-\ on the block again shows the block. This is especially nice when you have

gigantic JSON files that you want to see different parts of at the same time, as seen below.

Figure 4: An example of Hideshow collapsing part of a JSON buffer

There are a whole bunch of ways to make this nice, I especially recommend reading the manual for

Hideshow and also checking out hs-hide-level where you can hide everything at a certain nested

level.

Finally, if you want to go all-in for a hierarchical view of JSON data, I'd recommend json-navigator.

Searching through logs

Probably the number two thing that people want to do with logs (other than ignore them, of course), is to search them. Looking for that needle in the multi-megabyte haystack.

Yes, I'm aware there are tools for this. I work for the company that is behind the Elastic Stack which people use for log search and analysis. This article is about Emacs though, so we'll keep our searching within the editor4.

Let's start with the basics then, searching is bound to C-s (forwards) and C-r (backwards),

however, I find that regexp searching by default is a little nicer, so I bind the following

(global-set-key (kbd "C-s") 'isearch-forward-regexp) (global-set-key (kbd "C-r") 'isearch-backward-regexp) (global-set-key (kbd "C-M-s") 'isearch-forward) (global-set-key (kbd "C-M-r") 'isearch-backward)

So now regular expressions are used for the default search. I'd also recommend that if you deal with

multi-lingual stuff that you do (setq search-default-mode 'character-fold-to-regexp) so that you

can search for accented characters with their ASCII equivalents.

Next, let's get a little fancier with our searching but doing an "occur" search, there are a number of different ways to do this:

Occurrences within Isearch

Search for something with C-s, while the search is active hit M-x isearch-occur to transform the

search into the "occur" equivalent, you'll see a new buffer pop up with the matches highlighted,

this is like a search results page, where you can scroll through and hit RET on each entry to jump

to that line.

You can also activate this directly from a running isearch by using the following snippet, which

binds it to C-o:

;; Activate occur easily inside isearch (define-key isearch-mode-map (kbd "C-o") (lambda () (interactive) (let ((case-fold-search isearch-case-fold-search)) (occur (if isearch-regexp isearch-string (regexp-quote isearch-string))))))

If you remember back in the "Dealing with large files" section I mentioned VLF, VLF has its own

"occur" implementation for doing this same occur search in a gigantic file. It's bound to C-c C-v

o.

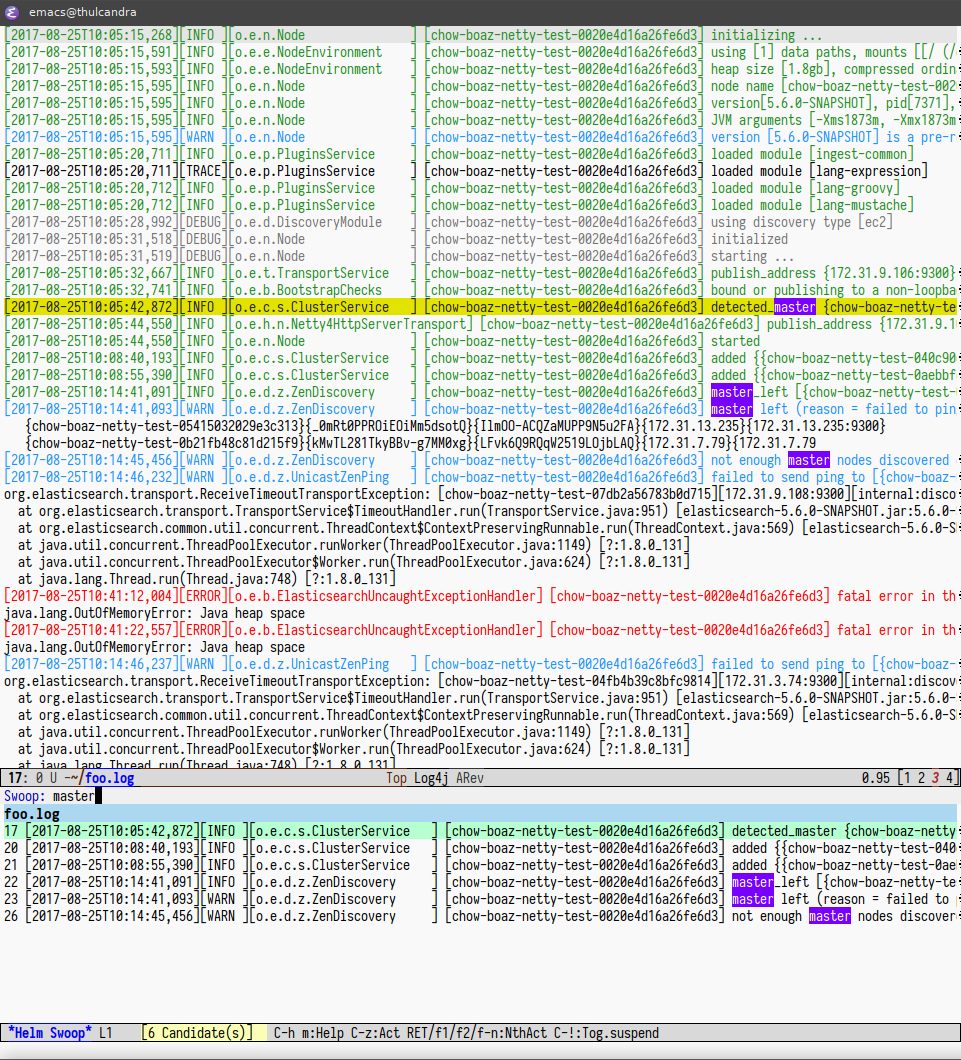

Using Helm

The second way to do this is to use Helm. Helm is an incremental narrowing framework, and while I

don't have the space to talk about it in entirety here (you can check out a presentation I gave on

Helm if you want), it does provide a nice helm-occur function. I bind mine to C-x o for doing

occur across a file.

A nice alternative to helm-occur that still uses helm is the helm-swoop package. A nice binding as

M-i, you can even transition from a regular C-s search to swoop with the following

configuration:

(use-package helm-swoop :ensure t :bind (("M-i" . helm-swoop) ("M-I" . helm-swoop-back-to-last-point) ("C-c M-i" . helm-multi-swoop)) :config ;; When doing isearch, hand the word over to helm-swoop (define-key isearch-mode-map (kbd "M-i") 'helm-swoop-from-isearch) ;; From helm-swoop to helm-multi-swoop-all (define-key helm-swoop-map (kbd "M-i") 'helm-multi-swoop-all-from-helm-swoop) ;; Save buffer when helm-multi-swoop-edit complete (setq helm-multi-swoop-edit-save t ;; If this value is t, split window inside the current window helm-swoop-split-with-multiple-windows t ;; Split direcion. 'split-window-vertically or 'split-window-horizontally helm-swoop-split-direction 'split-window-vertically ;; don't auto select the thing at point helm-swoop-pre-input-function (lambda () "") ;; If nil, you can slightly boost invoke speed in exchange for text ;; color. If I want pretty I'll use helm-occur since it keeps colors helm-swoop-speed-or-color nil))

Figure 6: Using helm-swoop to get an interactive search result list

Using Swiper

Use Swiper. Swiper is like Helm, but for the Ivy crowd. I don't have a configuration I can share since I don't use Ivy, but if you do, check it out. Everyone has their favorite so it's worth checking them all out to see which you like the most.

I can show you a screenshot of Swiper searching a log though.

External searching tools

While Emacs' searching tools are pretty powerful, sometimes you want to use an external tool because

you're more familiar with it, or it has some feature you really want. For example, I often use ag

(The Silver Searcher)5 or

even regular grep for some queries.

Again, a lot of these are centered around Helm, but you don't have to go 100% into Helm to use them, just install the mode and play around!

Helm-ag allows you to search within the local directory, or the current file. If you invoke

helm-ag you can search everything in the local directory, popping up a buffer similar to

helm-swoop or occur. If you only want to search the file you're currently viewing,

helm-ag-this-file has got you covered.

If, instead of the swoop/occur-like buffer, you want a separate plain buffer of results (the same

way M-x grep would return them), check out ag.el. Having both options is nice and they're not

mutually exclusive, so there's no reason not to install both!

Have a collection of logs to search? Turn it into a standalone Projectile project by creating a

blank .projectile file in the directory where the logs are present. You can now use all the

regular Projectile functions to search/open/etc all the files in the directory. I find this very

useful at work since we routinely get tarballs full of diagnostic information from customers. I can

easily swap between logs within the same project with C-c p b, search across the project with ag

using C-c p s s and I overwrite the binding for C-s in the projectile list buffer to search the

project with ag instead of grep with the following:

(use-package helm-projectile :ensure t :demand t ;; demand it be loaded! :bind (:map projectile-command-map ("b" . helm-projectile-switch-to-buffer) ("d" . helm-projectile-find-dir) ("f" . helm-projectile-find-file) ("p" . helm-projectile-switch-project) ("s s" . helm-projectile-ag)) :init (setq projectile-switch-project-action 'helm-projectile) (helm-projectile-on) (helm-delete-action-from-source "Grep in projects `C-s'" helm-source-projectile-projects) (helm-add-action-to-source "Ag in project C-s'" 'helm-do-ag helm-source-projectile-projects) (bind-key "C-s" (defun helm-projectile-do-ag () (interactive) (helm-exit-and-execute-action #'helm-do-ag)) helm-projectile-projects-map) :config (setq-default helm-truncate-lines t helm-projectile-truncate-lines t))

Note that you'll need to use helm-projectile for most of this functionality.

Done searching through your many log files? Hit C-c p K to kill all the project-related buffers

and clean up your list of Emacs buffers.

Filtering logs

Sometimes logs are giant and you don't care about the whole thing, sometimes you only care about messages that match (or don't match!) a particular regular expression. Emacs has nice filtering capabilities for doing that.

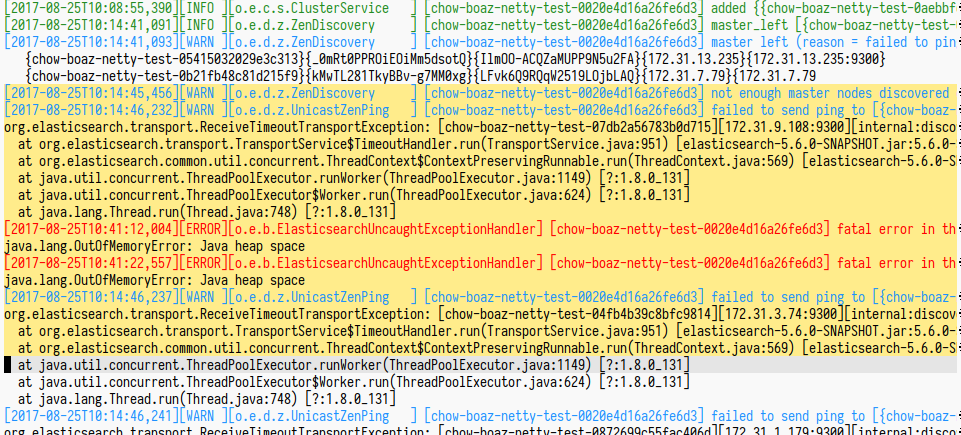

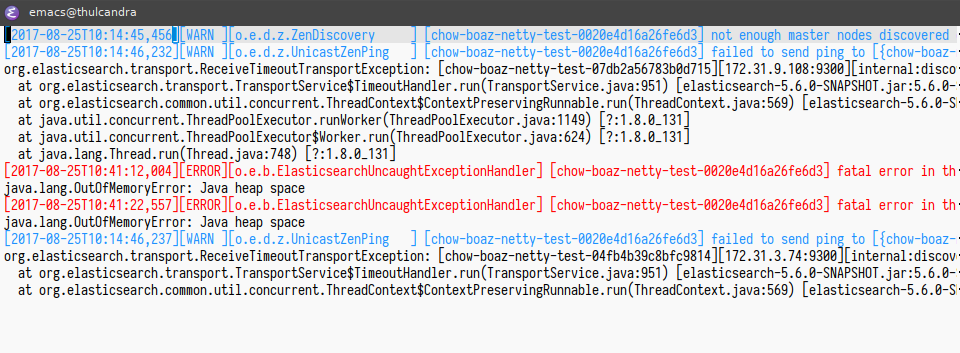

First, let's say you only want to see lines related to gc overhead in the following snippet:

[2017-08-25T10:40:19,197][WARN ][o.e.d.z.UnicastZenPing ] [chow-boaz-netty-test-0020e4d16a26fe6d3] failed to send ping to [{chow-boaz-netty-test-0e5a0b7b1b8df23c6}{9xWjsUaUThu45IGA7z4syQ}{SRuqW5T9QhCNWlF6FOzxWQ}{172.31.11.133}{172.31.11.133:9300}] org.elasticsearch.transport.ReceiveTimeoutTransportException: [chow-boaz-netty-test-0e5a0b7b1b8df23c6][172.31.11.133:9300][internal:discovery/zen/unicast] request_id [111244] timed out after [91773ms] at org.elasticsearch.transport.TransportService$TimeoutHandler.run(TransportService.java:951) [elasticsearch-5.6.0-SNAPSHOT.jar:5.6.0-SNAPSHOT] at org.elasticsearch.common.util.concurrent.ThreadContext$ContextPreservingRunnable.run(ThreadContext.java:569) [elasticsearch-5.6.0-SNAPSHOT.jar:5.6.0-SNAPSHOT] at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) [?:1.8.0_131] at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) [?:1.8.0_131] at java.lang.Thread.run(Thread.java:748) [?:1.8.0_131] [2017-08-25T10:41:12,004][ERROR][o.e.b.ElasticsearchUncaughtExceptionHandler] [chow-boaz-netty-test-0020e4d16a26fe6d3] fatal error in thread [elasticsearch[chow-boaz-netty-test-0020e4d16a26fe6d3][[unicast_connect]][T#22]], exiting java.lang.OutOfMemoryError: Java heap space [2017-08-25T10:41:22,557][ERROR][o.e.b.ElasticsearchUncaughtExceptionHandler] [chow-boaz-netty-test-0020e4d16a26fe6d3] fatal error in thread [elasticsearch[chow-boaz-netty-test-0020e4d16a26fe6d3][[unicast_connect]][T#15]], exiting java.lang.OutOfMemoryError: Java heap space [2017-08-25T10:39:47,126][WARN ][o.e.d.z.UnicastZenPing ] [chow-boaz-netty-test-0020e4d16a26fe6d3] failed to send ping to [{chow-boaz-netty-test-049d876c70f125f0d}{uzw2ka32TW6OFhhUhOEokw}{ERVpj26CQvGoYjTpsfZXxg}{172.31.0.143}{172.31.0.143:9300}] org.elasticsearch.transport.ReceiveTimeoutTransportException: [chow-boaz-netty-test-049d876c70f125f0d][172.31.0.143:9300][internal:discovery/zen/unicast] request_id [111284] timed out after [58879ms] at org.elasticsearch.transport.TransportService$TimeoutHandler.run(TransportService.java:951) [elasticsearch-5.6.0-SNAPSHOT.jar:5.6.0-SNAPSHOT] at org.elasticsearch.common.util.concurrent.ThreadContext$ContextPreservingRunnable.run(ThreadContext.java:569) [elasticsearch-5.6.0-SNAPSHOT.jar:5.6.0-SNAPSHOT] at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) [?:1.8.0_131] at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) [?:1.8.0_131] at java.lang.Thread.run(Thread.java:748) [?:1.8.0_131] [2017-08-25T10:39:46,305][WARN ][o.e.d.z.UnicastZenPing ] [chow-boaz-netty-test-0020e4d16a26fe6d3] failed to send ping to [{chow-boaz-netty-test-0a063ee638b1334bf}{pei7WBfzQVGJAEQe5Q1RFQ}{tlcPJzK_R1yTHvbNZZnffA}{172.31.15.119}{172.31.15.119:9300}] org.elasticsearch.transport.ReceiveTimeoutTransportException: [chow-boaz-netty-test-0a063ee638b1334bf][172.31.15.119:9300][internal:discovery/zen/unicast] request_id [111288] timed out after [58058ms] at org.elasticsearch.transport.TransportService$TimeoutHandler.run(TransportService.java:951) [elasticsearch-5.6.0-SNAPSHOT.jar:5.6.0-SNAPSHOT] at org.elasticsearch.common.util.concurrent.ThreadContext$ContextPreservingRunnable.run(ThreadContext.java:569) [elasticsearch-5.6.0-SNAPSHOT.jar:5.6.0-SNAPSHOT] at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) [?:1.8.0_131] at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) [?:1.8.0_131] at java.lang.Thread.run(Thread.java:748) [?:1.8.0_131] [2017-08-25T10:39:43,842][WARN ][o.e.d.z.UnicastZenPing ] [chow-boaz-netty-test-0020e4d16a26fe6d3] failed to send ping to [{chow-boaz-netty-test-06c66494074a1cd9a}{clPK6oWWTweGgJlxcN3Nzw}{6WsIBxfSSXO8YZjG51VCuw}{172.31.0.125}{172.31.0.125:9300}] org.elasticsearch.transport.ReceiveTimeoutTransportException: [chow-boaz-netty-test-06c66494074a1cd9a][172.31.0.125:9300][internal:discovery/zen/unicast] request_id [111299] timed out after [55573ms] at org.elasticsearch.transport.TransportService$TimeoutHandler.run(TransportService.java:951) [elasticsearch-5.6.0-SNAPSHOT.jar:5.6.0-SNAPSHOT] at org.elasticsearch.common.util.concurrent.ThreadContext$ContextPreservingRunnable.run(ThreadContext.java:569) [elasticsearch-5.6.0-SNAPSHOT.jar:5.6.0-SNAPSHOT] at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) [?:1.8.0_131] at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) [?:1.8.0_131] at java.lang.Thread.run(Thread.java:748) [?:1.8.0_131] [2017-08-25T10:39:45,489][WARN ][o.e.d.z.UnicastZenPing ] [chow-boaz-netty-test-0020e4d16a26fe6d3] failed to send ping to [{chow-boaz-netty-test-0f80aab837b93ff7d}{V-AJB-noRiSgmirMQcoiQw}{pgzjcwopQnKwDURP9F0xkw}{172.31.2.69}{172.31.2.69:9300}] org.elasticsearch.transport.ReceiveTimeoutTransportException: [chow-boaz-netty-test-0f80aab837b93ff7d][172.31.2.69:9300][internal:discovery/zen/unicast] request_id [111291] timed out after [57242ms] at org.elasticsearch.transport.TransportService$TimeoutHandler.run(TransportService.java:951) [elasticsearch-5.6.0-SNAPSHOT.jar:5.6.0-SNAPSHOT] at org.elasticsearch.common.util.concurrent.ThreadContext$ContextPreservingRunnable.run(ThreadContext.java:569) [elasticsearch-5.6.0-SNAPSHOT.jar:5.6.0-SNAPSHOT] at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) [?:1.8.0_131] at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) [?:1.8.0_131] at java.lang.Thread.run(Thread.java:748) [?:1.8.0_131] [2017-08-25T10:39:43,019][WARN ][o.e.d.z.UnicastZenPing ] [chow-boaz-netty-test-0020e4d16a26fe6d3] failed to send ping to [{chow-boaz-netty-test-0fb2df454e6db09df}{QCgt76j1SpWgH5j3C-N48w}{yQaxR83gRHmOPvek9c8yEA}{172.31.13.178}{172.31.13.178:9300}] org.elasticsearch.transport.ReceiveTimeoutTransportException: [chow-boaz-netty-test-0fb2df454e6db09df][172.31.13.178:9300][internal:discovery/zen/unicast] request_id [111339] timed out after [53927ms] at org.elasticsearch.transport.TransportService$TimeoutHandler.run(TransportService.java:951) [elasticsearch-5.6.0-SNAPSHOT.jar:5.6.0-SNAPSHOT] at org.elasticsearch.common.util.concurrent.ThreadContext$ContextPreservingRunnable.run(ThreadContext.java:569) [elasticsearch-5.6.0-SNAPSHOT.jar:5.6.0-SNAPSHOT] at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) [?:1.8.0_131] at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) [?:1.8.0_131] at java.lang.Thread.run(Thread.java:748) [?:1.8.0_131] [2017-08-25T10:41:29,708][INFO ][o.e.m.j.JvmGcMonitorService] [chow-boaz-netty-test-0020e4d16a26fe6d3] [gc][1991] overhead, spent [40.1s] collecting in the last [2.3m] [2017-08-25T10:40:49,262][WARN ][o.e.d.z.UnicastZenPing ] [chow-boaz-netty-test-0020e4d16a26fe6d3] failed to send ping to [{chow-boaz-netty-test-0bce3b8616d2e5ef9}{lPVY83haQ_SJi5uGeUHEcA}{v7S-N-3WQYqGQDgPfSg-ew}{172.31.7.123}{172.31.7.123:9300}] org.elasticsearch.transport.ReceiveTimeoutTransportException: [chow-boaz-netty-test-0bce3b8616d2e5ef9][172.31.7.123:9300][internal:discovery/zen/unicast] request_id [111301] timed out after [30175ms] at org.elasticsearch.transport.TransportService$TimeoutHandler.run(TransportService.java:951) [elasticsearch-5.6.0-SNAPSHOT.jar:5.6.0-SNAPSHOT] at org.elasticsearch.common.util.concurrent.ThreadContext$ContextPreservingRunnable.run(ThreadContext.java:569) [elasticsearch-5.6.0-SNAPSHOT.jar:5.6.0-SNAPSHOT] at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) [?:1.8.0_131] at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) [?:1.8.0_131] at java.lang.Thread.run(Thread.java:748) [?:1.8.0_131] [2017-08-25T10:39:44,672][WARN ][o.e.d.z.UnicastZenPing ] [chow-boaz-netty-test-0020e4d16a26fe6d3] failed to send ping to [{chow-boaz-netty-test-08be7641b56ba11d2}{xBmcgvMVTtqw4-eoHAXKJA}{Hbe3pMofShSERMD1CL5bKw}{172.31.14.139}{172.31.14.139:9300}] org.elasticsearch.transport.ReceiveTimeoutTransportException: [chow-boaz-netty-test-08be7641b56ba11d2][172.31.14.139:9300][internal:discovery/zen/unicast] request_id [111296] timed out after [55574ms] at org.elasticsearch.transport.TransportService$TimeoutHandler.run(TransportService.java:951) [elasticsearch-5.6.0-SNAPSHOT.jar:5.6.0-SNAPSHOT] at org.elasticsearch.common.util.concurrent.ThreadContext$ContextPreservingRunnable.run(ThreadContext.java:569) [elasticsearch-5.6.0-SNAPSHOT.jar:5.6.0-SNAPSHOT] at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) [?:1.8.0_131] at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) [?:1.8.0_131] at java.lang.Thread.run(Thread.java:748) [?:1.8.0_131] [2017-08-25T10:40:19,197][WARN ][o.e.d.z.UnicastZenPing ] [chow-boaz-netty-test-0020e4d16a26fe6d3] failed to send ping to [{chow-boaz-netty-test-06cccd3608a957bfe}{kklvuhaoT-KaL3UQMaiFkQ}{JVfudSosQ4e8a-8xVk8qkg}{172.31.0.91}{172.31.0.91:9300}] org.elasticsearch.transport.ReceiveTimeoutTransportException: [chow-boaz-netty-test-06cccd3608a957bfe][172.31.0.91:9300][internal:discovery/zen/unicast] request_id [111243] timed out after [91773ms] at org.elasticsearch.transport.TransportService$TimeoutHandler.run(TransportService.java:951) [elasticsearch-5.6.0-SNAPSHOT.jar:5.6.0-SNAPSHOT] at org.elasticsearch.common.util.concurrent.ThreadContext$ContextPreservingRunnable.run(ThreadContext.java:569) [elasticsearch-5.6.0-SNAPSHOT.jar:5.6.0-SNAPSHOT] at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) [?:1.8.0_131] at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) [?:1.8.0_131] at java.lang.Thread.run(Thread.java:748) [?:1.8.0_131] [2017-08-25T10:39:44,672][WARN ][o.e.d.z.UnicastZenPing ] [chow-boaz-netty-test-0020e4d16a26fe6d3] failed to send ping to [{chow-boaz-netty-test-03defe155b12bb46b}{t3E9wy2WQSahl2rnkGuB8w}{sWLJo6flQm2Ens2xS_wSrQ}{172.31.3.117}{172.31.3.117:9300}] org.elasticsearch.transport.ReceiveTimeoutTransportException: [chow-boaz-netty-test-03defe155b12bb46b][172.31.3.117:9300][internal:discovery/zen/unicast] request_id [111295] timed out after [56424ms] at org.elasticsearch.transport.TransportService$TimeoutHandler.run(TransportService.java:951) [elasticsearch-5.6.0-SNAPSHOT.jar:5.6.0-SNAPSHOT] at org.elasticsearch.common.util.concurrent.ThreadContext$ContextPreservingRunnable.run(ThreadContext.java:569) [elasticsearch-5.6.0-SNAPSHOT.jar:5.6.0-SNAPSHOT] at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) [?:1.8.0_131] at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) [?:1.8.0_131] at java.lang.Thread.run(Thread.java:748) [?:1.8.0_131]

If you do a M-x keep-lines and then type memory\|gc\|error the log will then become:

[2017-08-25T10:41:12,004][ERROR][o.e.b.ElasticsearchUncaughtExceptionHandler] [chow-boaz-netty-test-0020e4d16a26fe6d3] fatal error in thread [elasticsearch[chow-boaz-netty-test-0020e4d16a26fe6d3][[unicast_connect]][T#22]], exiting java.lang.OutOfMemoryError: Java heap space [2017-08-25T10:41:22,557][ERROR][o.e.b.ElasticsearchUncaughtExceptionHandler] [chow-boaz-netty-test-0020e4d16a26fe6d3] fatal error in thread [elasticsearch[chow-boaz-netty-test-0020e4d16a26fe6d3][[unicast_connect]][T#15]], exiting java.lang.OutOfMemoryError: Java heap space [2017-08-25T10:41:29,708][INFO ][o.e.m.j.JvmGcMonitorService] [chow-boaz-netty-test-0020e4d16a26fe6d3] [gc][1991] overhead, spent [40.1s] collecting in the last [2.3m]

Everything that didn't match the regular expression was removed. Keep in mind that this only works on the lines below the current point, so if you want to remove everything, you'll need to be at the top of the file (or not, depending on what you want to remove)

On the other side of the spectrum is flush-lines, this removes lines that match the regular

expression, so with the same original log, if we did M-x flush-lines and then ^org\|.+at[[:space:]]

[2017-08-25T10:40:19,197][WARN ][o.e.d.z.UnicastZenPing ] [chow-boaz-netty-test-0020e4d16a26fe6d3] failed to send ping to [{chow-boaz-netty-test-0e5a0b7b1b8df23c6}{9xWjsUaUThu45IGA7z4syQ}{SRuqW5T9QhCNWlF6FOzxWQ}{172.31.11.133}{172.31.11.133:9300}] [2017-08-25T10:41:12,004][ERROR][o.e.b.ElasticsearchUncaughtExceptionHandler] [chow-boaz-netty-test-0020e4d16a26fe6d3] fatal error in thread [elasticsearch[chow-boaz-netty-test-0020e4d16a26fe6d3][[unicast_connect]][T#22]], exiting java.lang.OutOfMemoryError: Java heap space [2017-08-25T10:41:22,557][ERROR][o.e.b.ElasticsearchUncaughtExceptionHandler] [chow-boaz-netty-test-0020e4d16a26fe6d3] fatal error in thread [elasticsearch[chow-boaz-netty-test-0020e4d16a26fe6d3][[unicast_connect]][T#15]], exiting java.lang.OutOfMemoryError: Java heap space [2017-08-25T10:39:47,126][WARN ][o.e.d.z.UnicastZenPing ] [chow-boaz-netty-test-0020e4d16a26fe6d3] failed to send ping to [{chow-boaz-netty-test-049d876c70f125f0d}{uzw2ka32TW6OFhhUhOEokw}{ERVpj26CQvGoYjTpsfZXxg}{172.31.0.143}{172.31.0.143:9300}] [2017-08-25T10:39:46,305][WARN ][o.e.d.z.UnicastZenPing ] [chow-boaz-netty-test-0020e4d16a26fe6d3] failed to send ping to [{chow-boaz-netty-test-0a063ee638b1334bf}{pei7WBfzQVGJAEQe5Q1RFQ}{tlcPJzK_R1yTHvbNZZnffA}{172.31.15.119}{172.31.15.119:9300}] [2017-08-25T10:39:43,842][WARN ][o.e.d.z.UnicastZenPing ] [chow-boaz-netty-test-0020e4d16a26fe6d3] failed to send ping to [{chow-boaz-netty-test-06c66494074a1cd9a}{clPK6oWWTweGgJlxcN3Nzw}{6WsIBxfSSXO8YZjG51VCuw}{172.31.0.125}{172.31.0.125:9300}] [2017-08-25T10:39:45,489][WARN ][o.e.d.z.UnicastZenPing ] [chow-boaz-netty-test-0020e4d16a26fe6d3] failed to send ping to [{chow-boaz-netty-test-0f80aab837b93ff7d}{V-AJB-noRiSgmirMQcoiQw}{pgzjcwopQnKwDURP9F0xkw}{172.31.2.69}{172.31.2.69:9300}] [2017-08-25T10:39:43,019][WARN ][o.e.d.z.UnicastZenPing ] [chow-boaz-netty-test-0020e4d16a26fe6d3] failed to send ping to [{chow-boaz-netty-test-0fb2df454e6db09df}{QCgt76j1SpWgH5j3C-N48w}{yQaxR83gRHmOPvek9c8yEA}{172.31.13.178}{172.31.13.178:9300}] [2017-08-25T10:41:29,708][INFO ][o.e.m.j.JvmGcMonitorService] [chow-boaz-netty-test-0020e4d16a26fe6d3] [gc][1991] overhead, spent [40.1s] collecting in the last [2.3m] [2017-08-25T10:40:49,262][WARN ][o.e.d.z.UnicastZenPing ] [chow-boaz-netty-test-0020e4d16a26fe6d3] failed to send ping to [{chow-boaz-netty-test-0bce3b8616d2e5ef9}{lPVY83haQ_SJi5uGeUHEcA}{v7S-N-3WQYqGQDgPfSg-ew}{172.31.7.123}{172.31.7.123:9300}] [2017-08-25T10:39:44,672][WARN ][o.e.d.z.UnicastZenPing ] [chow-boaz-netty-test-0020e4d16a26fe6d3] failed to send ping to [{chow-boaz-netty-test-08be7641b56ba11d2}{xBmcgvMVTtqw4-eoHAXKJA}{Hbe3pMofShSERMD1CL5bKw}{172.31.14.139}{172.31.14.139:9300}] [2017-08-25T10:40:19,197][WARN ][o.e.d.z.UnicastZenPing ] [chow-boaz-netty-test-0020e4d16a26fe6d3] failed to send ping to [{chow-boaz-netty-test-06cccd3608a957bfe}{kklvuhaoT-KaL3UQMaiFkQ}{JVfudSosQ4e8a-8xVk8qkg}{172.31.0.91}{172.31.0.91:9300}] [2017-08-25T10:39:44,672][WARN ][o.e.d.z.UnicastZenPing ] [chow-boaz-netty-test-0020e4d16a26fe6d3] failed to send ping to [{chow-boaz-netty-test-03defe155b12bb46b}{t3E9wy2WQSahl2rnkGuB8w}{sWLJo6flQm2Ens2xS_wSrQ}{172.31.3.117}{172.31.3.117:9300}]

If you only care about how many occurrences of something is in the file, you can use how-many,

so if I wanted to know how many failed pings there were in the file, I would do M-x how-many and

then type failed to send ping, and then I'll get back in the modeline:

10 occurrences

If you have trouble with the regular expressions, don't forget that M-x regexp-builder is your

friend!

Grab bag

Here are some things that don't really fit into searching and filtering, but are still useful when dealing with log files.

Highlighting pieces of text in a file

Sometimes you want to permanently highlight something, so that it sticks out to you when you're parsing through a particular log. There's a nice package for this called hl-anything. I configure it like the following6:

(use-package hl-anything :ensure t :diminish hl-highlight-mode :commands hl-highlight-mode :init (global-set-key (kbd "<f7> <f7>") 'hl-highlight-thingatpt-local) (global-set-key (kbd "<f7> u") 'hl-unhighlight-all-local) (global-set-key (kbd "<f7> U") 'hl-unhighlight-all-global) (global-set-key (kbd "<f7> n") 'hl-find-next-thing) (global-set-key (kbd "<f7> p") 'hl-find-prev-thing))

If you enable this by doing M-x hl-highilght-mode you can then hit F7 F7 to highlight either the

region or the thing at point everywhere in the local file. If you're on the point of a highlighted

thing, you can jump to the next one with F7 n or F7 p for the previous one. Multiple highlights

are also supported. I find this to be very helpful when you find a phrase in a log that you want to

jump around between, and have it stand out whenever you come across it. There are some concerns

about the speed however7, so YMMV.

Pop up a shell

Sometimes you just need a shell where you are, so you can do something with sed/awk/etc, so I have a

couple of helpers, eshell-here and shell-here that I bind to C-! and C-M-! respectively. I

generally use the eshell one since it doesn't spawn an actual shell and eshell is my primary shell.

(defun eshell-here () "Opens up a new shell in the directory associated with the current buffer's file. The eshell is renamed to match that directory to make multiple eshell windows easier." (interactive) (let* ((parent (if (buffer-file-name) (file-name-directory (buffer-file-name)) default-directory)) (height (/ (window-total-height) 3)) (name (car (last (split-string parent "/" t))))) (split-window-vertically (- height)) (other-window 1) (eshell "new") (rename-buffer (concat "*eshell: " name "*")) (insert (concat "ls")) (eshell-send-input))) (defun shell-here () "Opens up a new shell in the directory associated with the current buffer's file. The shell is renamed to match that directory to make multiple shell windows easier." (interactive) ;; `shell' already handles splitting, so don't do the same thing as ;; `eshell-here'. (shell "new") (rename-buffer (concat "*shell: " name "*"))) (global-set-key (kbd "C-!") #'eshell-here) (global-set-key (kbd "C-M-!") #'shell-here)

And some nice glue for quickly closing eshell windows (you'll need to exit regular shell buffers however you usually do).

(defun eshell/x () "Closes the EShell session and gets rid of the EShell window." (delete-window) (eshell/exit))

Sharing snippets of logs with others

Finally, as the last grab bag thing, I'd like to share my favorite pasting site, http://termbin.com/

So, why is it better than other termbin sites? Well. First off, pastes are plain text - no fancy highlighting, Javascript8, "social" features, or other nonsense when all you actually want is to read the text.

Second, you don't go to a site to paste to it, you use Netcat. Available on Linux, OSX, and Windows systems, sending logs is as easy as

$ cat my_file.log | nc termbin.com 9999

Termbin will spit back a URL, which you can curl directly to get the text without any extraneous text.

You can easily send things from Emacs straight to Termbin by doing the following:

- Highlight the region of text you'd like to paste

- Hit

M-|to invokeshell-command-on-region - Type

nc termbin.com 9999 - Hit Enter, Emacs will send the text to Termbin and spit back the URL (check

*Messages*if you missed the URL)

Voilà - text pasted and you have a nice link to share. Pastes aren't stored forever (check the Termbin site for the full details), but for quick sharing it can't be beat.

Bonus Tmux config

I understand if you're on a remote machine, you might not have access to Emacs, and piping into nc

may be hard for output that has already scrolled by. So, to help with this, you can have Tmux send

whatever is in its internal clipboard directly to Termbin. I have a handy little configuration in

~/.tmux.conf for this that looks like this:

# termbin paste bind-key P run-shell 'tmux saveb -| nc termbin.com 9999'

Highlight text with Tmux's scrollback (my bind-key for Tmux is C-z so I use C-z [, select the

text with C-SPC, and then hit C-w to copy it into Tmux's internal clipboard).

Once it's in Tmux's clipboard, I hit C-z P (again, C-z is my bind-key, the default is C-b so

which you use may differ) and Tmux will paste the text to Termbin and give me the URL.

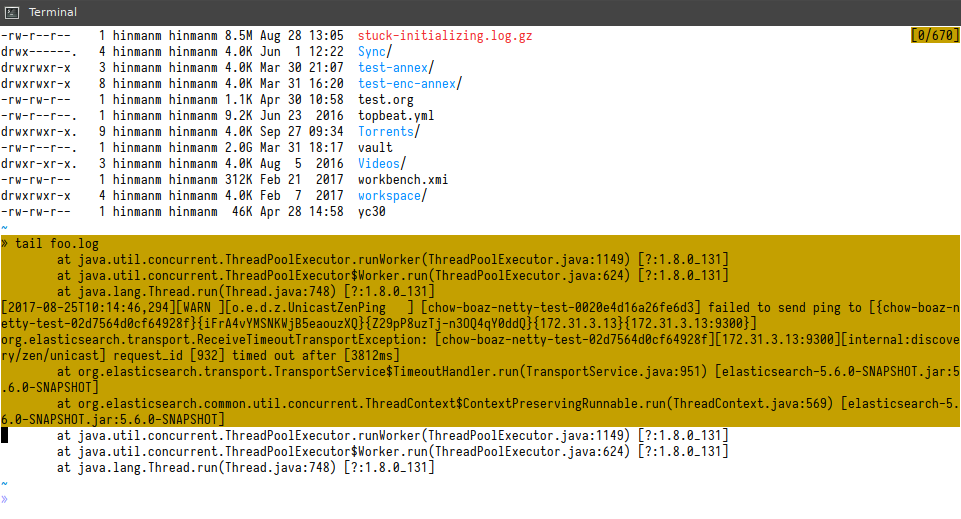

Here's a couple of screenshots that hopefully explain what I mean

Figure 7: Selecting text in Tmux's scrollback mode

Figure 8: After pasting the text to Termbin with C-z P

Other things that might be helpful

Just a couple shout-outs, whether or not I use them.

- beacon-mode

- Helpful if you frequently lose track of where your cursor is, as it gives visual indication whenever you move around.

- json-snatcher

- Inside of a deeply nested JSON tree, this can tell you the path to get to where you are, so you can use it with a different tool.

- split logs by time or node

- You'd have to know logstash to use this, but if you do, you can split logs by time or node with it.

- itail

- An interactive tail mode for filtering with unix pipes and highlighting.

Feel free to let me know if you have any other suggestions (contact details in the Conclusion) and I'll add them here!

Conclusion

And that's it! I hope this was helpful to anyone that views log files in Emacs. Working with logs is really a subset of regular editing in Emacs, so these things are still applicable in your regular day-to-day editing.

Remember: don't let anyone tell you that you can't do it in Emacs.

If you have any suggestions, corrections, or anything else, feel free to send me an email at lee at

this domain, or send a message to @thnetos.